Ext JS has a useful object creation pattern: most constructors accept a hash of configuration parameters. This can be used instead of the prototype creation pattern (not to be confused with JavaScript's prototypal inheritance). Rather than creating new objects as copies of an existing object, you create them from a set of configuration data.
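To make the idea concrete outside of Ext JS, here is a minimal sketch in plain JavaScript. The MenuItem constructor below is a hypothetical stand-in, not the real Ext.menu.Item, and the defaults it sets are assumptions chosen for illustration.

```javascript
// Hypothetical stand-in for a config-driven constructor (not the Ext JS class).
function MenuItem(config) {
  // Defaults apply to any option the caller leaves out.
  this.text = "";
  this.icon = null;
  this.disabled = false;

  // Copy the caller's own options over the defaults.
  for (var key in config) {
    if (Object.prototype.hasOwnProperty.call(config, key)) {
      this[key] = config[key];
    }
  }
}

// The config hash is plain data, so it can be stored and reused.
var deleteConfig = { text: "Delete", icon: "delete.png" };

// Each call builds a separate object from the same configuration.
var first = new MenuItem(deleteConfig);
var second = new MenuItem(deleteConfig);
```

Because the hash is just data, it can be kept around and turned into as many independent objects as needed.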

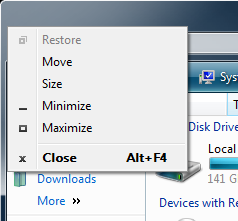

You want an example? OK. Today, I was creating context menus for items in a tree. There is a global pool of possible actions, and each node responds to a different subset of them. When the user right-clicks on a node, I need to:

- Create a Menu instance

- Add all of the appropriate menu items to it

- Show the menu

My first attempt created the menu items up front:

    var actionMenuMap = {
        addChild: new Ext.menu.Item({text: "Add child", icon: "add.png"}),
        // "delete" is a reserved word, so the key is quoted for older engines
        "delete": new Ext.menu.Item({text: "Delete", icon: "delete.png"}),
        fireZeMissiles: new Ext.menu.Item({text: "Fire ze Missiles!", icon: "fire.png"})
    };

    var nodeActions = ["addChild", "delete"]; // in practice, this would come from the node itself

    // dangerous nesting ahead!
    new Ext.menu.Menu({
        items: nodeActions.map(function(a) {
            return actionMenuMap[a];
        })
    }).showAt(event.getXY());

That didn't work: it seemed that a menu item instance can't be shared between menu instances. I might be able to get it to work by removing the menu items after the menu is dismissed, but I don't actually need to. I can simply hold on to the configuration information instead.

    var actionMenuMap = {
        addChild: {text: "Add child", icon: "add.png"},
        // "delete" is a reserved word, so the key is quoted for older engines
        "delete": {text: "Delete", icon: "delete.png"},
        fireZeMissiles: {text: "Fire ze Missiles!", icon: "fire.png"}
    };

    var nodeActions = ["addChild", "delete"]; // in practice, this would come from the node itself

    // now we're cooking with functional programming!
    new Ext.menu.Menu({
        items: nodeActions.map(function(a) {
            // build a fresh item from the stored configuration each time
            return new Ext.menu.Item(actionMenuMap[a]);
        })
    }).showAt(event.getXY());

In case you are not familiar, map is a function found in every functional language and many dynamic languages. Older JavaScript engines don't provide it natively (it was standardized as Array.prototype.map in ECMAScript 5), but Prototype, jQuery, Dojo, MochiKit, and probably every other JavaScript framework supply an equivalent. Here's a sample implementation for reference:

    // Only define map where the engine doesn't already provide one
    if (!Array.prototype.map) {
        Array.prototype.map = function(f) {
            if (typeof f !== "function") {
                throw new Error("map takes a function");
            }
            var result = new Array(this.length);
            for (var i = 0; i < this.length; ++i) {
                result[i] = f(this[i]);
            }
            return result;
        };
    }

What could possibly make this better? In addition to taking a hash, the constructor could also accept a function. That function would run after the rest of construction and manipulate the new object: adding children, adjusting settings, or calculating values. This would be pure icing, of course. Factory functions also feel a lot more lightweight to me than factory objects. See also Rails' version of the K combinator.
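A constructor that takes both a hash and a function might look something like the sketch below. This is not an Ext JS feature: the Menu type, its add method, and the init parameter are all hypothetical names made up for illustration.

```javascript
// Hypothetical container type; none of these names are Ext JS APIs.
function Menu(config, init) {
  this.items = [];

  // Step 1: apply the configuration hash.
  for (var key in config) {
    if (Object.prototype.hasOwnProperty.call(config, key)) {
      this[key] = config[key];
    }
  }

  // Step 2: hand the constructed object to the caller's function,
  // if one was supplied, so it can finish the setup.
  if (typeof init === "function") {
    init.call(this, this);
  }
}

Menu.prototype.add = function (item) {
  this.items.push(item);
  return this;
};

var nodeActions = ["addChild", "delete"];

// The function runs after the hash has been applied and adds the children.
var menu = new Menu({ title: "Node actions" }, function (m) {
  for (var i = 0; i < nodeActions.length; ++i) {
    m.add({ text: nodeActions[i] });
  }
});
```

The constructor still returns the new object, whatever the function does to it. That is the shape the K combinator comparison points at.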

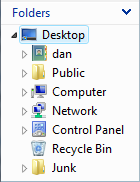

Can you believe that it's easy to delete the wrong file?
